No: ‘Noindex’ Detected in ‘Robots’ Meta Tag: Guide To Understanding & Fixing the Issue

Marketing IBTI

In website optimization, the status “No: ‘Noindex’ Detected in ‘Robots’ Meta Tag” can worry site owners and webmasters alike. It is worth understanding, because it points to a potential problem with your website’s visibility on search engines.

Here’s a quick overview: This phrase means that certain pages on your website are set not to appear in search engine results using the ‘noindex’ command in the ‘robots’ meta tag. To solve this, you’ll need to edit your website’s HTML to remove the ‘noindex’ instruction, make sure the robots.txt file doesn’t accidentally block crawlers, and fix any conflicts caused by plugins or themes adding ‘noindex’ tags.

In this guide, we’ll explore the details of ‘noindex’ and ‘robots’ meta tags, offering practical insights and step-by-step actions to understand and fix this issue. Our goal is to help your online presence thrive in the competitive digital world.

Understanding the ‘Noindex’ and ‘Robots’ Meta Tag

When you see the message “No: ‘Noindex’ Detected in ‘Robots’ Meta Tag,” it means that the webpage in question has been configured to tell search engines not to index its content.

Let’s break it down:

- ‘Noindex’: This is a directive used in a webpage’s “robots” meta tag. It instructs search engine crawlers not to include that specific page’s content in their index, meaning the page won’t appear in search engine results.

Picture search engine crawlers, such as Googlebot, as internet explorers. They follow links, discover web pages, and create a list. But when they bump into a page with a ‘noindex’ tag, they politely skip adding it to their list. This means that the page won’t pop up in search results.

- ‘Robots’ Meta Tag: The “robots” meta tag is a piece of HTML code that webmasters use to provide instructions to search engine bots about how they should crawl and index the content of a webpage.

So, when you see this phrase, it’s telling you that the webpage has been set up to prevent search engines from including it in their search results. This could be intentional, such as for private or temporary pages that aren’t meant to be publicly accessible through search engines.
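To make the tag concrete, here is a minimal Python sketch of what a robots meta tag looks like and how its content value breaks down into directives. The example tags and the helper names (`parse_directives`, `blocks_indexing`) are illustrative assumptions, not part of any SEO tool.

```python
# Illustrative tags as they might appear inside a page's <head>.
BLOCKED_TAG = '<meta name="robots" content="noindex, follow">'
ALLOWED_TAG = '<meta name="robots" content="index, follow">'


def parse_directives(content: str) -> set[str]:
    """Split a robots 'content' value into lowercase directives."""
    return {part.strip().lower() for part in content.split(",") if part.strip()}


def blocks_indexing(content: str) -> bool:
    """Return True when the directives include 'noindex'."""
    return "noindex" in parse_directives(content)


if __name__ == "__main__":
    # The content values below match the two example tags above.
    print(blocks_indexing("noindex, follow"))
    print(blocks_indexing("index, follow"))
```

The comparison is case-insensitive because the directive can be written as ‘noindex’, ‘NOINDEX’, or any mix of cases.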

Common Reasons for Noindex Detection

Let’s look at what causes ‘noindex’ problems on your website. There are a few common reasons:

- On Purpose or by Mistake: Sometimes, people deliberately keep certain pages out of search results by adding ‘noindex.’ Other times, the directive is added by mistake, especially when building or updating a website.

- Plugins and Themes: Extra elements you add to your website, like plugins or themes, might automatically put ‘noindex’ on some pages.

- Updates and Settings: When you update your website or change some settings, the system managing your content (CMS) might also add ‘noindex’ without you knowing.

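- Robots.txt Mistakes: Indexing trouble can also come from mistakes in a file called ‘robots.txt,’ which tells search engines which parts of your site they may crawl. The file does not add ‘noindex’ by itself, but if it blocks a page, crawlers cannot read that page’s meta tag, so they will not notice when you remove ‘noindex.’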
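Since robots.txt governs crawling rather than indexing, it helps to check whether a rule is hiding a page from crawlers. The sketch below uses Python’s standard `urllib.robotparser` module. The rules, the `example.com` URLs, and the helper name `is_crawlable` are made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real site would serve this at /robots.txt.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def is_crawlable(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given rules allow user_agent to crawl url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for url in ("https://example.com/blog/post", "https://example.com/private/page"):
        print(url, is_crawlable(ROBOTS_TXT, "Googlebot", url))
```

If a page you want indexed comes back as not crawlable, the robots.txt rule is worth reviewing before you look at the meta tag.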

How to Identify Noindex Issues on Your Site

Let’s figure out if there are ‘noindex’ issues on your site:

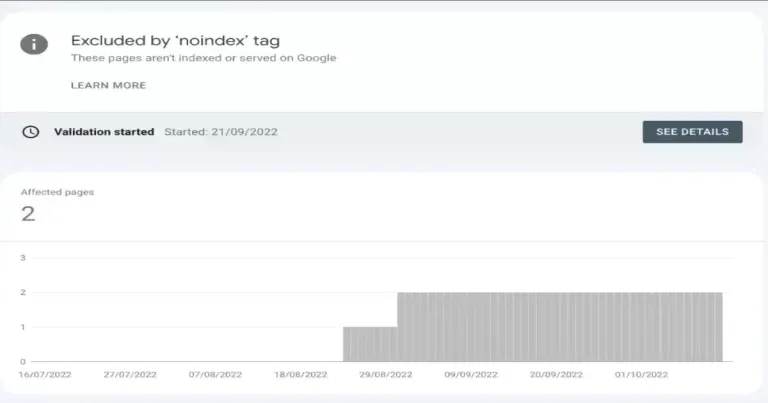

- Google Search Console: It’s a great tool that gives you reports on what pages are not showing up in searches because of ‘noindex.’ You can see which pages are being left out.

- Manually Check the Code: View the source code of your pages and look in the head section for the ‘robots’ meta tag. If its content includes ‘noindex,’ that’s why the page isn’t showing in search results.

- Use SEO Tools: Tools like SEMrush, Ahrefs, or SEO-GO can also help you find these ‘noindex’ issues on your website. They make it easier to see what’s going on with your pages and how search engines are treating them.
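The manual check can also be scripted. The sketch below, under the assumption that you already have each page’s HTML as a string, scans a set of pages and lists those whose robots meta tag contains ‘noindex.’ The class name `RobotsMetaFinder`, the function `find_noindex_pages`, and the sample pages are hypothetical.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collects directives from every <meta name="robots"> tag in a page."""

    def __init__(self):
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # HTMLParser lowercases attribute names; values keep their case.
        attr_map = {name: (value or "") for name, value in attrs}
        if attr_map.get("name", "").strip().lower() != "robots":
            return
        for part in attr_map.get("content", "").split(","):
            if part.strip():
                self.directives.add(part.strip().lower())


def find_noindex_pages(pages: dict[str, str]) -> list[str]:
    """Return the URLs whose HTML carries a 'noindex' robots directive."""
    flagged = []
    for url, html in pages.items():
        finder = RobotsMetaFinder()
        finder.feed(html)
        if "noindex" in finder.directives:
            flagged.append(url)
    return flagged


if __name__ == "__main__":
    sample_pages = {  # made-up pages for illustration
        "https://example.com/": '<head><meta name="robots" content="index, follow"></head>',
        "https://example.com/draft": '<head><meta name="robots" content="noindex"></head>',
    }
    print(find_noindex_pages(sample_pages))
```

This only inspects the HTML you pass in. It does not fetch pages or read HTTP headers, so treat it as a starting point rather than a full audit.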

Ways to Resolve Noindex Issues

Let’s fix the ‘noindex’ issues once you’ve found them:

- Edit Website Code: Change your website’s HTML to remove the ‘noindex’ instruction from the ‘robots’ tag. This tag tells search engines whether to include the page in search results.

- Check Robots.txt file: Make sure a file called ‘robots.txt’ isn’t stopping search engines from looking at your pages by mistake. It’s like a sign telling search engines where they can and can’t go on your site.

- CMS Settings Check: Look at the settings of the system managing your content (CMS). Check for anything that might be causing ‘noindex’ problems and fix it.

- Plugin and Theme Issues: If you added extra elements like plugins or themes, they might be telling search engines not to show your pages. Find and fix any conflicts caused by these extra things.
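As a rough illustration of the code edit, the sketch below removes ‘noindex’ from a robots meta tag while keeping any other directives. It assumes a simple tag with the `name` attribute before `content`; real pages vary, and a regular expression is a brittle way to edit HTML, so in practice the change is usually made in the template or CMS setting that generates the tag. The function names are hypothetical.

```python
import re

# Matches a simple <meta name="robots" content="..."> tag (name before content).
ROBOTS_META = re.compile(
    r'<meta\s+name=["\']robots["\']\s+content=["\']([^"\']*)["\']\s*/?>',
    re.IGNORECASE,
)


def remove_noindex(content: str) -> str:
    """Drop 'noindex' from a comma-separated robots content value."""
    kept = [
        part.strip()
        for part in content.split(",")
        if part.strip() and part.strip().lower() != "noindex"
    ]
    return ", ".join(kept)


def strip_noindex_from_html(html: str) -> str:
    """Rewrite robots meta tags in html so they no longer contain 'noindex'."""

    def _rewrite(match: re.Match) -> str:
        cleaned = remove_noindex(match.group(1))
        if not cleaned:
            # No directives left: drop the tag, since pages are indexable by default.
            return ""
        return f'<meta name="robots" content="{cleaned}">'

    return ROBOTS_META.sub(_rewrite, html)


if __name__ == "__main__":
    before = '<head><meta name="robots" content="noindex, follow"></head>'
    print(strip_noindex_from_html(before))
```

Other directives such as ‘follow’ are left in place, so only the indexing instruction changes.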

Ensuring Correct Indexing

Making sure your website shows up correctly in search results is important after fixing ‘noindex’ issues. Here’s how you can do it:

- Submit Sitemap Again: Give Google a map of your website by resubmitting your site’s sitemap to Google Search Console. It helps search engines understand your site structure.

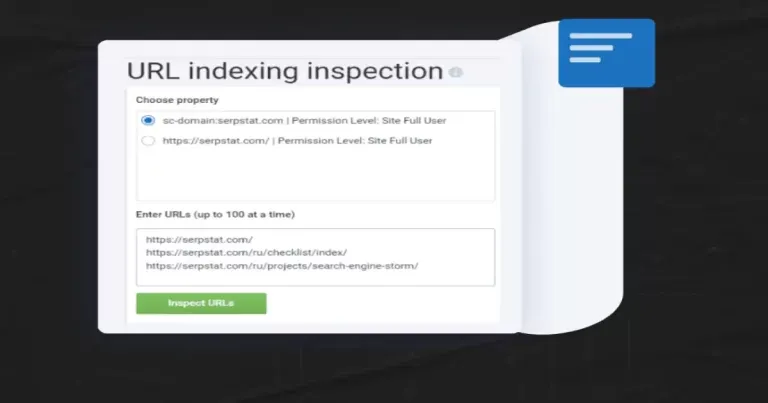

- Use the “URL Inspection” Tool: Ask Google to take another look at your pages with the “URL Inspection” tool in Google Search Console, which replaced the older “Fetch as Google” feature. It’s like inviting Google to visit your pages again and update its information about them.

- Check Google Search Console: Keep an eye on your website’s status in Google Search Console. It tells you if Google is properly including your pages in search results. Monitoring this helps ensure everything is working as it should.

Do you know why local businesses should use local hosting? Visit our informative blog to learn the reasons.

Tips on Adopting Best Practices for Website Indexing

To make sure your website works well in search engines, here are some good things to do:

- Use ‘Noindex’ Wisely: If there are pages you don’t want to appear in search results, use ‘noindex.’ Pages you want search engines to show need no special tag, because indexing is the default, though you can state ‘index’ explicitly. It’s like telling search engines which pages are important.

- Update Your Sitemap Often: Keep the map of your website (sitemap) up to date. Add new pages and change information when needed. This helps search engines quickly find and understand your content.

- Be Smart with Your Website’s Size: If your site has many low-quality or not-so-important pages, consider getting rid of them. This helps search engines use their time and energy better when checking your site.

- Make Your Website Easy to Navigate: Help search engines and visitors by organizing your site well. Use clear web addresses (URLs), link pages logically, and create easy-to-use menus. This makes it simpler for everyone to find what they’re looking for.
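For the sitemap tip above, here is a minimal sketch of generating a sitemap file with Python’s standard library, so it can be rebuilt whenever pages change. The function name `build_sitemap`, the URLs, and the dates are illustrative assumptions. The XML namespace is the one defined by the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, str]]) -> str:
    """Build sitemap XML from (absolute URL, last-modified YYYY-MM-DD) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Made-up pages; list only the URLs you want search engines to index.
    print(build_sitemap([
        ("https://example.com/", "2024-01-15"),
        ("https://example.com/blog/", "2024-02-01"),
    ]))
```

Save the output as a file such as `sitemap.xml` on your site, then submit its address in Google Search Console.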

Do you want to know about hosting services for websites? Visit our insightful blog to get a thorough understanding.

Contact a Pro

For professional help in keeping an eye on your website, choose IBTI. Our IBTI Monitor platform ensures your website is always up and running smoothly. We offer features like:

- uptime monitors to make sure your site is always available,

- heartbeat monitoring for checking regular tasks,

- customizable status pages to show how your site is doing,

- incident tracking to notify you quickly,

- connecting a custom domain for a personalized touch,

- and instant email alerts.

IBTI Monitor gives you real-time updates on your website’s health, any past issues, and when your domain needs renewal. Start using IBTI Monitor today to know about problems and keep your online presence in great shape.

Wrapping Up

To sum it up, figuring out the puzzle of “No: ‘Noindex’ Detected in ‘Robots’ Meta Tag” is really important for keeping a good online presence. This guide shared tips on understanding and fixing the issue, highlighting how essential it is to index your website properly.

By following the simple steps mentioned, like finding ‘noindex’ problems and doing things the right way, you can manage how well your website shows up on search engines. Taking control of these ‘noindex’ issues ensures your important stuff gets seen by people searching online.

FAQs

What is a Robots.txt Generator?

A Robots.txt Generator is a tool that helps create the robots.txt file. This file tells search engine bots which pages or sections they should not crawl. It controls crawling rather than indexing, so to keep a page out of search results, use the ‘noindex’ directive instead.

How to remove the ‘noindex’ tag on WordPress?

To remove the ‘noindex’ tag in WordPress, log in to your WordPress dashboard, go to the page or post you want to index, and edit the page settings. In the ‘Advanced’ or ‘SEO’ section, look for the ‘noindex’ option and deselect it. Save your changes, and the page will be eligible for search engine indexing, enhancing its visibility on search results.

How do I fix the blocked robots meta tag in WordPress?

To fix a blocked robots meta tag in WordPress, go to “Settings > Reading” in your site’s Dashboard, and ensure that the option “Discourage search engines from indexing this site” is unchecked. This action allows search engines to index your site and resolves the issue of a blocked robots meta tag.

Marketing IBTI

IBTI is a technology company that develops IT-as-a-service solutions and provides technical teams for software development. We have been working with IT services for over 12 years, developing software and mobile applications for clients throughout Brazil. In recent years, we have engaged in an internationalization process and started to serve foreign customers, always with a view to the quality of the service provided.